LinXter: An Overview

by Sachin Dharmapurikar

Note: This is only an introduction to the LinXter architecture as a whole, intended to brief developers and other interested readers. A detailed description of each part of LinXter will be released soon.

LinXter is a cluster based on the Linux operating system. In this article we give an overview of the LinXter architecture. A cluster is a group of interconnected computers, called nodes, that together give the illusion of being a single computer. As the number of nodes increases, the computational power of the cluster also increases, but the performance gain is not linear. LinXter is a simple cluster implementation that suggests a few changes to existing cluster architectures; these changes will be implemented soon.
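One common way to see why adding nodes does not give a linear speedup is Amdahl's law. This is a general model, not something the LinXter design specifies: if a fraction `p` of a job can run in parallel on `n` nodes, the speedup is at most `1 / ((1 - p) + p / n)`. The short sketch below evaluates it; the function name `amdahl_speedup` is our own.

```c
#include <assert.h>

/* Upper bound on speedup predicted by Amdahl's law.
 * p: fraction of the work that can be parallelized (0.0 to 1.0)
 * n: number of nodes (at least 1)
 * The serial part (1 - p) does not shrink as nodes are added,
 * which is why the speedup grows more slowly than n. */
double amdahl_speedup(double p, int n)
{
    assert(p >= 0.0 && p <= 1.0);
    assert(n >= 1);
    return 1.0 / ((1.0 - p) + p / (double)n);
}
```

For example, with `p = 0.9`, going from 1 to 10 nodes gives a speedup of about 5.3 rather than 10, and no number of nodes can push it past 10.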

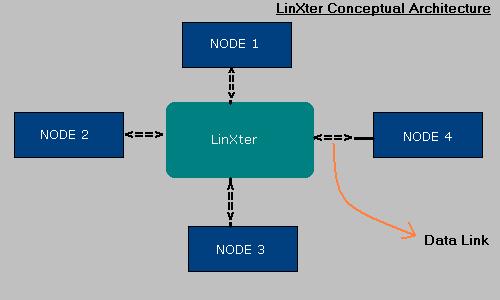

Fig: LinXter Conceptual architecture

As the figure shows, the architecture highlights three things:

- LinXter: LinXter is the cluster management system (you may also call it cluster middleware) that governs all operations of the nodes. It is the backbone of the cluster and is responsible for IPC, job scheduling, process management, and virtual memory management.

- Data Link: This is an Ethernet link with a bandwidth in the gigabit-per-second range. Such high bandwidth is necessary for efficient communication between nodes.

- Node: Nodes are ordinary PCs or any other computers, e.g. workstations or servers. Whatever its type, a computer is simply added to the cluster as a node. Every node should have the following:

  - an Ethernet interface

  - the LinXter OS

  - the ability to operate with or without LinXter

  All nodes must belong to the same architecture family.
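The requirement that all nodes share an architecture family suggests a check when a node joins. A minimal sketch, assuming each node reports its hardware name through the POSIX `uname()` call and that "same family" is approximated by an exact string match (a real check would need to treat names such as `i586` and `i686` as one family). `local_arch` and `arch_compatible` are hypothetical helpers, not part of LinXter.

```c
#include <string.h>
#include <sys/utsname.h>

/* Copy the local machine's hardware name (e.g. "x86_64") into buf.
 * Returns 0 on success, -1 on failure. */
int local_arch(char *buf, size_t len)
{
    struct utsname info;

    if (len == 0 || uname(&info) != 0)
        return -1;
    strncpy(buf, info.machine, len - 1);
    buf[len - 1] = '\0';
    return 0;
}

/* Hypothetical admission rule: a node may join only if its
 * architecture string matches the cluster's exactly. */
int arch_compatible(const char *cluster_arch, const char *node_arch)
{
    return strcmp(cluster_arch, node_arch) == 0;
}
```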

LinXter focuses mainly on the following components:

- IPC

- Process Pool

- Virtual Memory Management

- Active and Passive Nodes

- Scheduling

- Connectivity between nodes

- Process Management

We are focusing on these issues because they will be LinXter's main attractions. We have not yet decided on the following issues, but they will also be standardized soon:

- Filesystem

Several of the focus components are described in more detail below.

- Connectivity between nodes: Every node is connected through an Ethernet interface. For performance reasons, the bandwidth of this interface should be very high. These interfaces are analogous to the data buses in a CPU: just as those buses have very high bandwidth, our Ethernet should also have high bandwidth.
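A back-of-the-envelope calculation shows why link bandwidth matters when data such as a process image moves between nodes. This sketch assumes an ideal link with no protocol overhead or latency, so real Ethernet transfers will be somewhat slower; `transfer_seconds` is our own helper name.

```c
/* Ideal time, in seconds, to move `bytes` over a link carrying
 * `bits_per_second`, ignoring protocol overhead and latency. */
double transfer_seconds(double bytes, double bits_per_second)
{
    return bytes * 8.0 / bits_per_second;  /* 8 bits per byte */
}
```

Moving 100 MB (10^8 bytes) takes about 0.8 s at 1 Gbit/s but about 8 s at 100 Mbit/s.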

Because every node is identified by a unique number, it is easy to communicate with a particular node. This numbering system may be anything, for example TCP/IP addressing. There will be no host-client or frontend-client structure in LinXter. Every node will operate at the same level, and each node will take all necessary decisions by itself. This embodies the true concept of a cluster; otherwise it would simply be host-client parallel computing, not clustering.
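Since the numbering scheme is left open, here is one hypothetical mapping from a node number to an IPv4 address. It assumes all nodes sit in a private 10.0.0.0/24 subnet with node numbers 1 to 254; both the subnet and the helper name `node_address` are our assumptions, not part of LinXter.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdint.h>
#include <sys/socket.h>

/* Hypothetical scheme: node number n (1..254) maps to 10.0.0.n.
 * Writes the dotted-quad address into buf and returns 0,
 * or returns -1 if the number is out of range or buf is too small. */
int node_address(int node, char *buf, socklen_t len)
{
    struct in_addr addr;

    if (node < 1 || node > 254)
        return -1;
    /* Build 10.0.0.n in host byte order, then convert to network order. */
    addr.s_addr = htonl((UINT32_C(10) << 24) | (uint32_t)node);
    return inet_ntop(AF_INET, &addr, buf, len) ? 0 : -1;
}
```

Usage: with a buffer of `INET_ADDRSTRLEN` bytes, `node_address(7, buf, sizeof buf)` fills `buf` with `"10.0.0.7"`.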

We will also use message broadcasting in LinXter, since it makes one-to-many communication possible. It will also let us employ Dynamic Node Configuration.

- Active and Passive Nodes: LinXter introduces a new concept in which nodes operate in either Active or Passive mode.

Active mode is a special mode of operation in which the node is part of the cluster while still serving requests from its own user. Put simply, it shares some of its resources, such as part of its processing power, with the cluster.

Passive mode is a mode of operation in which the node dedicates all of its resources to the cluster and its user-interface devices are disabled.

- Process Pool: The process pool is constructed by sharing main memory or virtual memory from each individual node, so the process pool is a combination of main memory and virtual memory. As discussed above, every node in LinXter is able to schedule and manage itself, which makes it a true cluster. Since every node runs the same OS, the scheduling algorithms and management processes will be uniform.
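How a node's Active or Passive mode might affect what it contributes to the process pool can be sketched as follows. This assumes, purely for illustration, that a passive node contributes all of its memory while an active node contributes a configurable percentage; the type names, the `share_percent` field, and the example values are hypothetical.

```c
#include <stddef.h>

enum node_mode { NODE_ACTIVE, NODE_PASSIVE };

struct node_info {
    enum node_mode mode;
    unsigned long mem_mb;     /* main + virtual memory on the node, in MB */
    unsigned share_percent;   /* share given to the cluster in Active mode */
};

/* Memory a single node contributes to the process pool. */
unsigned long pool_contribution(const struct node_info *n)
{
    if (n->mode == NODE_PASSIVE)
        return n->mem_mb;                       /* everything goes to the cluster */
    return n->mem_mb * n->share_percent / 100;  /* only the configured share */
}

/* Total process-pool capacity across all nodes. */
unsigned long pool_capacity(const struct node_info *nodes, size_t count)
{
    unsigned long total = 0;

    for (size_t i = 0; i < count; i++)
        total += pool_contribution(&nodes[i]);
    return total;
}
```

For example, a passive node with 1024 MB plus an active node with 2048 MB sharing 25% gives a pool of 1536 MB.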

There is one more important thing about the process pool. Every process will be created as a packet (not to be confused with an Internet data packet). This packet will contain all the information needed to run the process, such as the processor registers, the process's global data, who created the job, and to whom the result should be submitted. Just as there is a process queue, there will also be a node queue. Whenever a process in the pool is in the ready state, the next node from the ready-node queue will fetch that process for execution. This is very simple because no other node in the cluster is involved: every node decides which process it will execute and schedules itself, and the process keeps running unless it receives a signal. Thus this system has no performance bottleneck or overhead concentrated on one particular machine, as normally happens in client-server computing and in some other clusters.

- Interprocess communication: IPC in LinXter will be very simple and effective. All POSIX communication mechanisms, including signals, pipes, and shared memory, will be implemented as they are. We are also planning to extend the IPC mechanism for efficient network communication.
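The process packet and the paired process and node queues from the Process Pool description can be sketched as plain C data structures. Everything here is an assumption for illustration: the field names, the fixed-size ring-buffer queues, and the `dispatch_next` helper are hypothetical, and a real implementation would keep these structures in cluster-shared memory with proper locking.

```c
#include <stdbool.h>
#include <stddef.h>

#define QUEUE_CAP 64

/* Hypothetical process "packet": everything needed to run the process.
 * The queues below hold pids that would index a table of these packets. */
struct process_packet {
    int pid;
    unsigned long registers[16];  /* saved processor registers */
    void *global_data;            /* the process's global data */
    int creator_node;             /* node that created the job */
    int result_node;              /* node the result is submitted to */
};

/* Simple FIFO ring buffer of ints (process ids or node ids). */
struct queue {
    int items[QUEUE_CAP];
    size_t head, count;
};

bool enqueue(struct queue *q, int v)
{
    if (q->count == QUEUE_CAP)
        return false;                       /* queue full */
    q->items[(q->head + q->count) % QUEUE_CAP] = v;
    q->count++;
    return true;
}

bool dequeue(struct queue *q, int *out)
{
    if (q->count == 0)
        return false;                       /* queue empty */
    *out = q->items[q->head];
    q->head = (q->head + 1) % QUEUE_CAP;
    q->count--;
    return true;
}

/* The node at the head of the ready-node queue fetches the process at
 * the head of the ready-process queue. Returns false, consuming nothing,
 * if either queue is empty. */
bool dispatch_next(struct queue *ready_procs, struct queue *ready_nodes,
                   int *pid, int *node)
{
    if (ready_procs->count == 0 || ready_nodes->count == 0)
        return false;
    dequeue(ready_procs, pid);
    dequeue(ready_nodes, node);
    return true;
}
```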

- Process management: This is another issue of major concern. In LinXter every process will first be submitted to the process pool, and only then will it be assigned to a node. New or improved load-balancing mechanisms will be used; they are currently being standardized and will be released soon. Locking mechanisms such as mutexes or spin locks will be provided for each process to ensure that no two nodes execute the same process at the same time.
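The guarantee that no two nodes execute the same process can be illustrated with an atomic compare-and-swap on an owner field, the primitive that spin locks are usually built on. This is a single-machine sketch using C11 atomics; across real nodes the owner field would have to live in memory all nodes can access atomically, or be protected by a distributed lock. The names `pool_entry`, `try_claim`, and `release_claim` are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>

#define UNCLAIMED 0   /* node ids are assumed to start at 1 */

struct pool_entry {
    int pid;
    atomic_int owner;   /* id of the node running this process, or UNCLAIMED */
};

/* Try to claim the process for `node`. At most one caller can succeed:
 * the compare-and-swap writes only if the owner is still UNCLAIMED. */
bool try_claim(struct pool_entry *e, int node)
{
    int expected = UNCLAIMED;
    return atomic_compare_exchange_strong(&e->owner, &expected, node);
}

/* Give the process back to the pool so it can be claimed again.
 * Only the current owner can release it. */
bool release_claim(struct pool_entry *e, int node)
{
    int expected = node;
    return atomic_compare_exchange_strong(&e->owner, &expected, UNCLAIMED);
}
```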

This was a brief introduction to LinXter, and we hope you now have a better understanding of it. Our documentation team is currently preparing the next versions of the documentation, which will be released soon. If you are interested in getting involved in this project, we would be glad to have you. We are keen to help you so that you can help us build a better future for LinXter.

Last updated: 7-01-2003

Contact: linxter@rediffmail.com